Access Control Lists in Solr/Lucene

This isn’t so much about access control lists in Solr or Lucene but more about access control lists in an inverted index in general. The problem is as follows. We have a large set of data that is access controlled. The access control is managed by users and they can individual items closed or open or anywhere between. The access control lists on the content, which may be files, or simply bundles of metadata is of the form 2 bitmaps, representing the permissions granted and denied, each pair of bitmaps being associated with a principal and the set of principal/bitmap pairs associated with each content item. A side complication is that the content is organised hierarchically and permissions for any one user inherit following the hierarchy back to the root of the tree. Users have many principals through membership of groups, through directly granted static principals and through dynamically acquired principals. All of this is implemented outside of the Solr in a content system. Its Solr’s task to index the content in such a way that a query on the content for an item is efficient and returns a dense result set that can have the one or two content items that the user can’t read, filtered out before the user gets to see the list. Ie we can tolerate a few items the user can’t read being found by a Solr query, but we cant tolerate most being unreadable. In the ACL bitmaps, we are only interested in a the read permission.

The approach I took to date was to look at each content item or set of metadata when its updated, calculate a set of all principals that can read the item and add those principals as a multivalued keyword property of the Solr document. The query, performed by a user computes the principals that the user has at the time they are making the query, and builds a Solr query that gets any document matching the query and with a reading principal in that set. Where the use of principals is moderate, this works well and does not overload either the cardinality of the inverted index where the reader principals are stored in Solr or the size of the Solr query. In these cases the query can be serviced as any other low cardinality query would be, by looking up and accumulating the bitmap representing the full set of documents for each reader principal in turn. The query then requires n lookups and accumulate operations, where n is the number of principals the user has, to resolve the permissions part of the query.

However, and this is the reason for this post, where this fails is where the cardinality of the reader principals becomes to high, or the number of principals that a user has is too high. Unfortunately those two metrics are connected. The more principals there are in a system, the more a user will need to access information, and so the reader principal mechanism can begin to break down. The alternative is just as unpleasant, where the user only has a single principal, their own. In those scenarios active management of ACLs in the content system becomes unscalable both in compute and human terms, which is why principals representing groups were introduced in the first place. Even if there were not limits to the size of a Solr query the cost of processing 1024 terms is prohibitive for anything other than offline processing.

One solution that has been suggested is to use a Bloom filter to represent the set of principals that the user has and test each indexed principal against this filter. If this is done as part of the query, as the result set is being created there is no gain over scanning all documents since the inverted index would not be used. There could be some benefit in using this approach once a potential set of documents is generated and sorted, since the cost of performing sufficient hashes to fill the appropriate set of bloom buckets is low enough that it could be used as a post query filter. I think the term in Solr is a Collector. In this scenario we are already verifying the user can read a content item or its metadata before delivering to the user, hence its acceptable to have a less that perfect set of pointers being emitted from Solr, provided that the set of pointers we retrieve is dense. We can’t afford to have a situation where the set of pointers is sparse, say several million items, and the only item the user can read is the last one. In that scenario any permissions checking performed without the benefit of Solr would be super expensive.

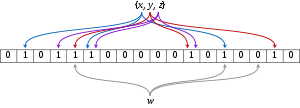

So, the Bloom filter applied within Solr has the potential to be able to filter most result sets rapidly enough to create a result set that is dense enough for more thorough checking. How dense does it need to be and how large does the bloom filter need to be ? That is an open-ended question, however if, on average you had to read 20% more elements than you returned that might not be excessive if results sets were generally limited to no more than the first 100 items. If that’s the case then 80% density is enough. Bloom provides a guarantee of no false negatives but a probability of a % of false positives, ie items the a Bloom filter indicates are present in a set, but which, are not. For classical Bloom filters 20% is an extremely high probability of false positive, certainly not acceptable for most applications. It has been reported in a number of places that the quality of the hash function used to fill the buckets of the filter is also of importance in the number of false positives since an uneven distribution of hashes over the number space represented by the Bloom bitmap will result in inefficient usage of that bitmap. Rather than doing the math which you will find on Wikipedia, knowing that all fast hashes are less than perfect, and being a pragmatist I did an experiment. In Apache Hadoop there are several implementations of the Bloom filter with 2 evenly distributed and efficient hash functions, Jenkins and Murmur2, so I have used that implementation. What I am interested in is how big a filter would I need to get 80% density to a set of results and how big would that bitmap need to be as the number of inputs to the bloom filter (the users principals) rises. It turns out, that very small bitmap lengths will give sufficient density where the number of input terms is small, even if the number of tested principal readers is high. So 32 bytes of Bloom filter is plenty large enough to test with < 20 principals. Unfortunately however, the cardinality of these bitmaps is too high to be a keyword in an inverted index. For example, if the system contained 256K principals, and we expected users on average to have no more than 64 principals we would need a bloom filter of no more than 256 bits to generate, on average 80% density. Since we are not attempting to index that bloom filter the cardinality of 2^^256 is not an issue. Had we tried to, we would almost certainly have generated an unusable inverted index. Also, that Bloom filter is constructed for each users query, we can dynamically scale it to suit the conditions at the time of the query (number of items in the system, and number of principals the user has). Real system with real users have more principals and sometimes users with more principals. A system with 1M principals that has on average 1024 principals per user will need a bloom filter containing about 8Kbits. Its certain that adding a 8Kbit token ( or a 1Kbyte[] ) as a single parameter to a Solr query circumvents the issue surrounding the number of terms we had previously, but it’s absolutely clear that the cardinality of 2^^8196 is going to be well beyond indexing, which means that the only way this will work is to post filter a potentially sparse set of results. That does avoid rebuilding the index.

From this small experiment I have some questions unanswered:

- Will converting a potentially sparse set of results be quick enough, or will it just expose another DoS vector?

- What will be the practical cost performing 100 (principals) x 20 (I was using 20 hashes) into an 8kbit filter to filter out each returned doc item?

- Will the processing of queries this way present a DOS vector?